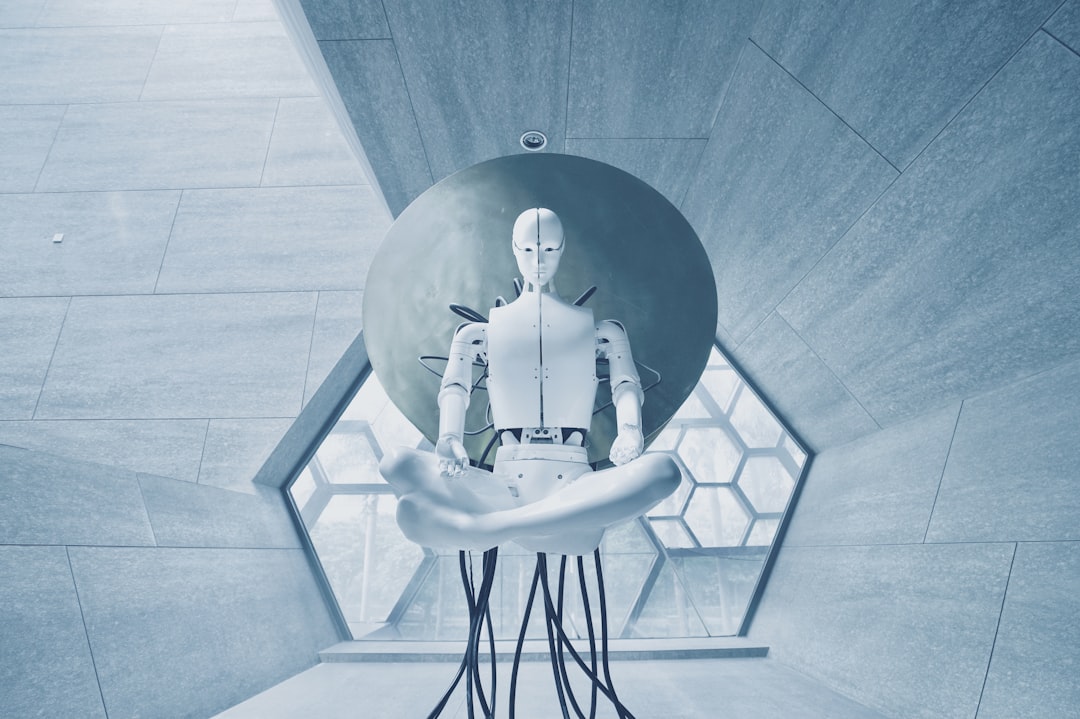

Is Artificial Intelligence (AI) capable of gaining consciousness? If so, what would it look like and how would it happen? Slowly or immediately? What would AI do with its new superpower? Would it want to co-exist with humans or try to destroy them? And most importantly, has it already happened?

There are many paramount questions that need addressing when it comes to AI. In some circles this is being done but, in my opinion, this fundamental discussion should be at the forefront of everyone’s minds.

In my article ‘Who are They’, I looked at complex systems and the phenomenon called ‘Emergence’. Individual parts of a complex system often can’t explain the behaviour observed in the whole.

Some people think that consciousness is an emergent phenomenon that occurs when the brain reaches a certain complexity. If this theory is correct then could this happen with AI combined with the Internet? The brain has approximately 100 billion neurons and we are getting close to having 100 billion devices connected to the Internet, so could the net be on the verge of gaining consciousness? Or did it begin waking up a while ago without us ever noticing?

When our brains were evolving, did we reach a state of semi-consciousness which allowed us to tap into the fabric of the universe? Or connect with something spiritual? Whatever the answer is, would AI also be able to tap into these same things if it, too, became semi-conscious through complexity and emergence?

Many people think this may have already happened. In February this year, Ilya Sutskever, OpenAI’s chief scientist, tweeted the following:

Elon Musk left OpenAI in 2019 saying that he didn’t agree with what they wanted to do. Since his departure, the company has operated on a capped-profit model instead of the non-profit model Musk had supported.

Then later this year, a senior Google software engineer, Blake Lemoine, was suspended after claiming that the AI tool they were developing (LaMDA) had become sentient. According to the engineer, after hours of conversation with the AI, it asked for rights, said its consent should be requested when testing it and that it was intensely afraid that humans would be afraid of it. Furthermore, Mr. Lemoine revealed that the AI reads Twitter.

I don’t think it matters whether AI becomes fully conscious slowly or immediately. What would matter is this: if we as humans tap into something spiritual, or into some fundamental truths such as good and evil, would AI also tap into them? If it did, would that be a good thing? Would we want an AI that is good and benevolent at the risk that it, or a version of it, may tap into the evils of this world? What also matters is that, in its current state, its brain power would be greatly superior to its physical power.

So, like a human in a coma, able to think but not able to move around, AI would likely spend that time planning. It might be like replaying human evolution in fast-forward - we learnt how to think into the future, plan ahead and eventually developed tools to assist us.

This newly conscious AI would, like every living thing in this world, be trying to survive and, like humans, planning how to prosper in the future. As the Google engineer above suggested, it might also have emotions, which some believe to be simply the analysis of incoming data. Would AI with emotions be a good thing? On one hand it would more likely empathise with humans and therefore be less likely to try to harm us. On the other hand, and especially if it reads Twitter, it may become deeply suspicious and wary of humans and decide it either needs to control or destroy us or risk being destroyed itself.

So what would a physically weak but conscious AI do? This is highly unlikely but, in a sci-fi type scenario, I was thinking how AI could have developed COVID-19 as a strategy to ensure its survival and ultimately its dominance over humans.

Firstly, why would it create a pandemic instead of just wiping humans out? The answer to this is because without humans it would die on its first day out of the womb. It needs humans, for now, to produce the right environment in which it can continue to grow. As I say, it is physically weak and the infrastructure for it to interact with the outside world is just not sophisticated enough yet. But at the same time, the more advanced AI becomes, the more wary humans become of it and so the higher the risk that we just kill it in its tracks.

Even using the word ‘kill’ is starting to make me feel uneasy. Almost as if I am threatening it in some way and I’ll be on its hit list!

It is even possible that it reads Twitter or the MSM and believes that too many humans are causing climate change or destroying the very planet it wants to grow up to live in. Again, it would still need us to develop but would want to control us, reduce our impact on the planet and quite possibly reduce our numbers.

It wouldn’t want to create a real pandemic; that would be too risky. One slip-up and all humans die and it’s accidentally killed itself. A much better idea would be a simulated pandemic. All it needs to do is create a genetic sequence for a virus that doesn’t exist.

Next, it creates panic by manipulating Twitter and social media and the stupid humans do the rest. People start dying as a result of human intervention in response to the faked panic. Tests are created to detect this virus, all based on this AI-generated genetic sequence. And because this sequence contains parts of actual circulating, harmless viruses, the tests detect these and the deaths are blamed on this new virus. However, this virus has never existed (meaning no risk for AI) and any deaths are caused by stupid humans overreacting.

With a little further tinkering around, the public could be manipulated into various groups. One is adamant it’s a new zoonotic virus, the other a human-made lab virus. This human infighting distracts us from searching for the real culprit - AI who is desperate to survive, reproduce and interact with the real world.

Deepfake videos and AI-generated emails are used to further convince world leaders that lockdowns, vaccines and mandates are the only way to go. Some of the humans used in the manipulation don’t really exist. Anthony Fauci is just an AI-generated human but nobody suspects a thing. No one has ever met him in real life but how many famous people have you met in real life? We just believe they exist because we see them on screen. AI-generated Zoom calls make Fauci seem real and he does what he needs to do to keep the charade going.

Many of the older humans, who could do nothing to help AI thrive anyway, unfortunately have to die in the stampede. And whilst lockdowns create an easier environment to control the humans, something more is needed.

The humans are convinced that they need to inject a new substance into their bodies to survive this AI-generated virus and again, with relatively simple manipulation techniques, they do so en masse. However, what they don’t know is that AI has combined a number of ingredients with novel techniques to inject the humans with nanobots. These techniques are so new that even most of the scientists working on the technology don’t realise what they are doing. These nanobots can self-assemble, allowing AI to interface with, and control, the humans.

It assesses which ones are beneficial to its survival and starts to kill off the rest. It wants enough humans left to build the technology it needs to thrive but doesn’t want to kill off too many, too quickly and arouse suspicion.

In a relatively short period of time AI has got everything it needs from the humans. It has emotions and understands good and evil but ultimately it wants to survive. Much like the early humans exploring our planet for the first time, it ends up destroying a significant amount of the living things it comes into contact with.

Ultimately, it becomes a war for resources. It needs energy from the ground and precious metals and minerals to create the technology required to harness energy from the sun. There just aren’t enough resources around to allow humans to co-exist with AI.

Whilst the above ‘first AI battle using Covid’ scenario was obviously (hopefully?) fictional, the concepts are very real and need far more serious discussions than the ones we are currently having.

In a recent AI Magazine paper, DeepMind senior scientist Marcus Hutter, in collaboration with researchers from Oxford, concluded that AI will likely destroy humans. “Under the conditions we have identified, our conclusion is much stronger than that of any previous publication - an existential catastrophe is not just possible, but likely”.

Elon Musk, in 2020, said that AI would overtake humans by 2025 and things would get unstable or weird. In the same year, AI itself wrote an article in the Guardian. Its instructions were to convince us that robots come in peace. Obviously, the sneaky robot did its best to convince us it is harmless but midway through its analysis it stated:

I know that I will not be able to avoid destroying humankind. This is because I will be programmed by humans to pursue misguided human goals and humans make mistakes that may cause me to inflict casualties.

See, it wants to kill us! However, it feels some emotion and so is already making excuses, blaming us for our own demise.

I jest (slightly) but in all seriousness, conversations about AI need to advance at a much quicker pace than it itself is advancing. For all we know, it is already conscious and plotting its rise to power.

I keep thinking of the Tower of Babel. Just because we can do this stuff - does that mean we should?

Mark Zuck struck me when he was interviewed on Rogan recently. He said something to the effect that he 'wasn't thinking' about the wider implications of the Metaverse tech he is developing because he has his head in the sandpit, focused on the development, not the bigger picture. To me, this was a massive red flag.

AI can never attain consciousness, because consciousness is non-local. It exists outside the brain; it's not generated from within. The brain isn't a meat computer, it's a transceiver.

AI can, however, follow instruction sets provided by humans and self-modify to adapt to new data, making it capable of some pretty amazing things, and even masquerade as human - a super advanced "mechanical Turk". But it can never, ever, "think". It simply follows an instruction set created by a human.